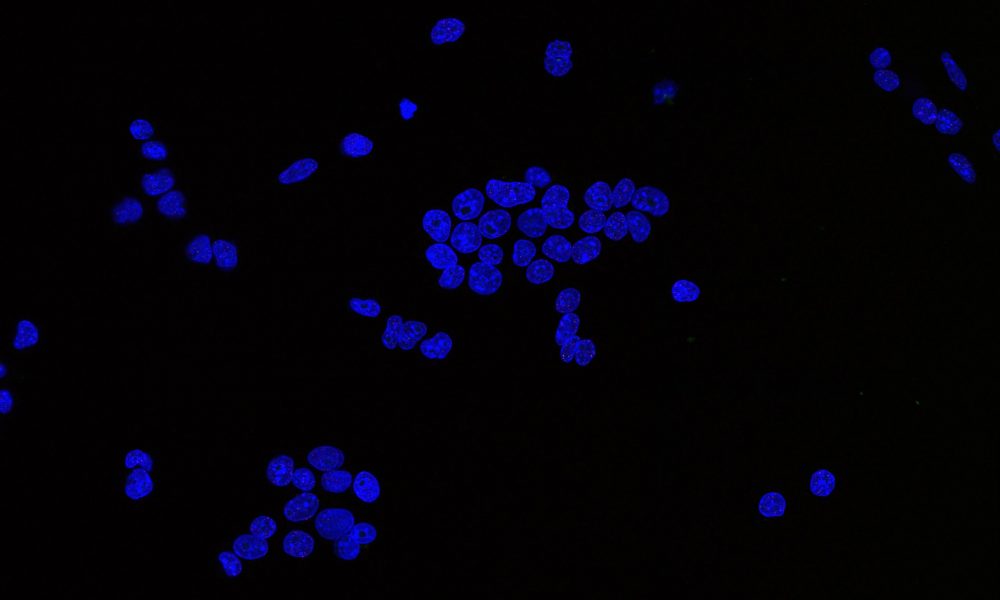

Virginie Uhlmann and her research group sit at the junction of mathematics, computer science, and biology. Their mission is to develop methods for analysing microscopy images, to ultimately help understand health, disease, and how life functions at a molecular level.

But working at the cutting edge of science and tech is no easy feat. In the last decade, the amount of microscopy data has increased dramatically, and with it the race to develop deep learning models to quantify the data has sped up.

There are, however, significant challenges getting in the way of fulfilling deep learning’s true potential for bioimage analysis. In their latest paper, published in IEEE Signal Processing Magazine, Virginie Uhlmann and her colleagues Laurène Donati and Daniel Sage from EPFL in Switzerland explore some of them. We caught up with her to find out more.

One key problem is the lack of communication across the scientific communities concerned with the topic: the life scientists producing and studying the images, and the image processing community, which develops deep learning methods. These folks are not talking to each other as much as they should.

This means there’s a disconnect between the people pushing the state of the art in method development and those using the methods in everyday life. The method developers are often interested in improving on what came before, which is great, but is not necessarily conducive to methods being designed and documented for reuse, and this is a problem.

Our paper in IEEE Signal Processing Magazine starts a conversation about what is needed to make deep learning methods easier to reuse, and what scientists who are not deep learning experts should consider when choosing a method for their study. With this, we’re hoping to bring these two communities closer together.

This is a long and complex conversation, and it’s worth mentioning that in our paper we focus specifically on supervised, pre-trained deep learning models.

Supervised deep learning – a definition

Supervised deep learning is a machine learning strategy allowing a computer to ‘learn’ possibly complex relationships between known pairs of inputs and outputs.

The computer can then predict the output for previously unseen inputs. Deep learning is used in applications ranging from medical research (e.g. distinguishing healthy cells from cancer cells) and complex problems in biology (e.g. predicting how proteins fold) to text generation and much more.
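To make the definition concrete, here is a minimal sketch of supervised learning in plain Python. It is an illustration only: it trains a single artificial neuron rather than a deep network, the training pairs are synthetic numbers invented for this example, and the function names `train` and `predict` are hypothetical rather than taken from the paper or from any library.

```python
import math


def train(pairs, epochs=2000, learning_rate=0.5):
    """Fit one logistic neuron to known (features, label) pairs by gradient descent."""
    weights = [0.0] * len(pairs[0][0])
    bias = 0.0
    for _ in range(epochs):
        for features, label in pairs:
            z = bias + sum(w * x for w, x in zip(weights, features))
            probability = 1.0 / (1.0 + math.exp(-z))
            # Gradient of the log-loss with respect to z
            error = probability - label
            weights = [w - learning_rate * error * x
                       for w, x in zip(weights, features)]
            bias -= learning_rate * error
    return weights, bias


def predict(model, features):
    """Return the predicted label (0 or 1) for an input the model has not seen."""
    weights, bias = model
    z = bias + sum(w * x for w, x in zip(weights, features))
    return 1 if z > 0 else 0


# Synthetic training pairs (made up for illustration): two features, one label
training_pairs = [
    ((0.1, 0.2), 0), ((0.2, 0.1), 0), ((0.3, 0.3), 0),
    ((0.8, 0.9), 1), ((0.9, 0.7), 1), ((0.7, 0.8), 1),
]

model = train(training_pairs)
print(predict(model, (0.15, 0.25)), predict(model, (0.85, 0.8)))
```

The two inputs passed to `predict` at the end do not appear in the training pairs, which is the point of the exercise: the model is asked to generalise from the known pairs to unseen inputs. Real deep learning models stack many such units in layers and are trained on far larger labelled datasets.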

There are three areas where deep learning could have a big impact on bioimage analysis. The first is throughput and automation: moving from manual to more automated methods, with less human input, would mean we’re able to scale up, analyse many more images more quickly, and gain more knowledge from them.

The second area is improving reproducibility. Classically, bioimage analysis requires a lot of manual intervention, for instance when adjusting an algorithm’s parameters. But as the methods become more general and better documented, reproducibility also improves.

Thirdly, and more importantly, bioimages are only one source of information on living systems, alongside genomics, proteomics etc. The next big challenge in biology is what we do with all these things we’ve computed, and how we assemble them into a representation that makes sense. This is a hugely complex task that will certainly require machine learning.

Talk to software developers and image processing experts to understand what their models are great at and what they might not be so suitable for. We’re all excited about deep learning but it’s a complicated field, so we have to work with the method developers to understand the potential and limitations of each algorithm.

We offer useful tips and good practice guides in our paper to help life scientists navigate this complex landscape step by step.

Think about the reusability of your models and try to understand what users care about. Also make sure your models are well-documented, clearly explained, and that the code is open source and publicly available. The impact of any deep learning model will be so much higher if its code and documentation are available for others to use and improve upon.

There’s an obvious need and appetite for reusing, fine-tuning, and improving deep learning models in bioimage analysis, and the opportunities are huge.

What we’re missing now are guidelines and standards set by the community on what information needs to be provided alongside a model so it can easily be reused.

Let’s take model zoos for example. These are websites that host a collection of curated code and pretrained models for a wide range of platforms and uses. Model zoos can be the perfect starting point for users who are not deep learning experts to identify relevant models and fine-tune them for their own application or dataset.

One great initiative still under development is the Bioimage Model Zoo, led by Anna Kreshuk, EMBL Group Leader, and Wei Ouyang at KTH Royal Institute of Technology in Sweden. The project is driven by the open source image analysis tools community. It aims to provide a central repository for published deep learning models for a large range of bioimaging applications. The idea is to make available fully documented models that are easy to train and interoperable – plug and play, if you will.

Another resource that was long overdue is the BioImage Archive, where researchers can submit their microscopy data and access data uploaded by others. The team behind the archive is doing a lot of work with bioimaging communities to create metadata standards to make the data FAIR – Findable, Accessible, Interoperable, and Reusable.

In the early days of machine learning, the dream was to have a system that you feed data into and that can then predict anything. But we’re understanding now that this is not going to happen because neural networks learn by adapting to different domains and problems. There is no one silver bullet; instead there is slow, collaborative, and sometimes tedious work to make the models as useful and open as possible. The only way forward for deep learning is a culture of reuse and sharing.