Read the latest Issue

What is 'normal' human variation? The 1000 Genomes Project, which now comes to an end, pushed knowledge forward and created technologies that will continue to be used to answer this question.

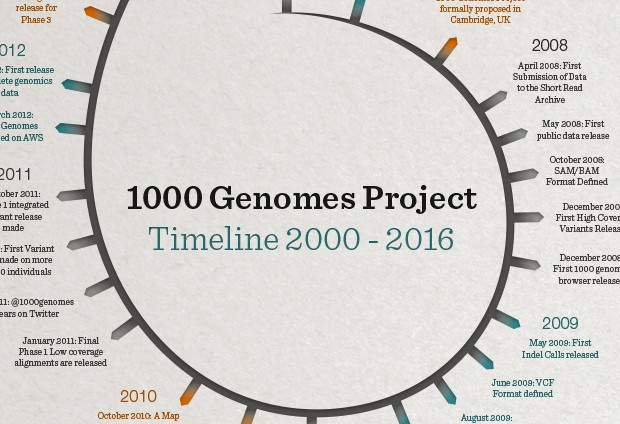

The 1000 Genomes Project, the most comprehensive fully open survey of human genetic variation ever performed, hit the headlines this week when scientists announced the final set of results from the eight-year international research effort. As well as exceeding its original aim (the team studied the DNA of more than 2500 people, instead of the 1000 originally envisaged), the project has given unprecedented insights into the genetic difference that makes each of us unique, and provided a baseline for studies into how genetic changes can cause disease.

Vital and impressive as these findings are, the EMBL scientists involved in the work say that the most important legacy of the project lies behind the headlines – in the methods and technological innovations that made the work possible. These methods have transformed how genetic and genomic research is done around the world. “With the exception of the Human Genome Project, the 1000 Genomes Project has had probably the largest impact on genomics and maybe on biology of any major project,” says Paul Flicek, senior scientist and team leader at the EBI.

First conceived in 2007, the 1000 Genomes Project set out to catalogue the differences, or variations, in the genetic instructions, or genomes, of different people in different populations around the world. At the time, the Human Genome Project had produced the first sequence of the whole human genome, but this was based on DNA from a handful of individuals. And a project known as the HapMap Project was under way to look for common differences in single DNA “letters” between people in the hope of discovering new insights into human variation and disease. The huge expense of DNA sequencing, however, meant that the HapMap used a very different, cheaper method to hunt for these differences. While it found millions of these single changes, it didn’t have the ability to sample human genetic variation in detail.

By 2007, sequencing technology had advanced sufficiently to make the mass sequencing of human genomes merely incredibly difficult, rather than impossibly expensive. Sequencing involves breaking up the genome into lots of pieces and then repeatedly reading sections of these pieces with the help of dedicated sequencing machines. Using computer software, scientists reassemble these pieces, or “reads” into the right order, by seeing how the ends of the sequences they had read overlap. This is a bit like ripping up several copies of a long, complex musical score – say, a Mahler symphony – and then trying to put the symphony back together by painstakingly reading the melody on each piece and looking for phrases at the beginning and end of each piece that match. Older sequencing technologies produced relatively long reads and enabled the Human Genome Project to complete the first draft of the whole human genome in 2000. But these technologies were time-consuming and extremely expensive. By the mid-2000s, however, new, faster and cheaper methods of DNA sequencing were being developed. Although this made the sequencing of lots of genomes feasible, the “reads” produced by the new methods were much shorter than the older ones – the equivalent of a few bars of symphonic score instead of long stretches of melody. This made assembling sequence much harder than before.

File formats are almost as important as electricity – it’s what powers everything

The first challenge for the members of the 1000 Genomes Project was to design ways of storing the sequence information in an electronic form, or file format, that would allow bioinformatics software to perform this extremely demanding computational challenge, known as alignment. Having succeeded, they then had to design a second format that would allow scientists to store and analyse the millions of differences in DNA sequences that exist between the genomes of different people. This had to be sufficiently flexible, yet also standardised, to allow researchers to make valid comparisons between them and extract meaningful information about human genetic variation.

The project came up with a file structure called Variant Call Format (VCF), which has since become the standard for all large genome projects. “Things like file formats are definitely on the un-sexy side, but they turn out to be almost as important as electricity – it’s what powers everything,” says Flicek. Also key was efficient logistics management by bioinformatics coordinator Laura Clarke to ensure that the deluge of data didn’t overwhelm the EBI’s resources.

These formats have since become standard in the field. “In the long term, the probably most important thing the project did is that it largely invented all of the technologies used in large-scale next-generation sequencing and in sequencing the genomes of individual people,” says Flicek. Another strength of the project was the fact that its data were publicly released as soon as it was available. “The real magic of the 1000 Genomes Project is that the data is completely open. So it can be used in all sorts of different ways,” says Flicek.

As the project progressed, it continued to innovate and respond to developments in DNA sequencing. With the help of new software developed by Jan Korbel’s team at EMBL Heidelberg, as well as in collaborating institutions, the 1000 Genomes team was able to study another kind of human genetic variation, one that accounts for most of the difference between individuals and also plays a key role in diseases such as cancer and inherited disease.

This variation is known as structural variation, and involves much larger sections of the genome than the small changes that had been studied before. “It’s relatively easy to identify single letter differences,” says Korbel. “That is different from large-scale changes.” Such changes include inversions, where long sections of sequence become reversed, deletions, where chunks of DNA are missing, and duplications, where sections of sequence are copied and inserted into the genome.

Even though these variations involve large areas of the genome (a page or two of musical score, instead of individual notes or bars), they are surprisingly hard to spot. This is partly down to the biology of the variations: they often occur in areas of the genome where the sequence of DNA letters is very repetitive. Such areas are notoriously difficult to sequence. What’s more, the variations often result in quite complex changes in the DNA sequence at their start and end points. “There’s typically some extra sequence missing, or extra sequence inserted, which makes it very, very challenging, if you don’t know what you’re looking for, to find these things,” says Korbel.

Again, the length of the sequencing reads presented problems: they were too short to easily reveal complex DNA sequence changes. To overcome this, Tobias Rausch, a bioinformatician in Korbel’s team, designed a new computational method called DELLY that could spot structural variation in short read data. Also key was the emergence of a new form of DNA sequencing, called single molecule real-time sequencing, which produces longer reads. Thanks to these innovations, the 1000 Genomes team was able to identify eight kinds of structural variations and assess how often they crop up in different populations.

To understand what effects structural variants and single DNA “letter”, or single-base pair, changes have on the individual, the team looked at the expression level, a measure of gene activity, of genes near the variations. This involved developing more accurate methods that could integrate different types of genetic variations to study their functional impact, a task that fell to Oliver Stegle and his team at EMBL-EBI. “There has been a community effort in refining statistical genetics technologies to fully exploit the data from the 1000 Genomes Project,” says Stegle.

This revealed that structural changes were up to 50 times more likely to alter gene function than SNPs, underlining structural variation as a major factor in what makes individuals differ from each other. The complex patterns of correlation between genetic variants meant that working out whether a variant seen in a segment of DNA was having an effect, or simply happened to sit near another one that did was far from easy. “Teasing that apart has been quite a challenge,” says Stegle.

The real magic of the 1000 Genomes Project is that the data is completely open.

Such discoveries and newly developed methods will act as invaluable resources for other genomics projects searching for genetic causes of human diseases says Korbel, as it will help them work out whether a variant they observe in a patient is likely to cause disease, or whether it is part of the normal genetic variation seen in that population. Such projects include the UK’s 100,000 Genomes Project, an ambitious undertaking to sequence the genomes of people with common and rare diseases, and the Pan-Cancer Analysis of Whole Genomes Project, to which Korbel and his team are contributing their expertise on structural variations and on germline genetics, to understand more about how cancers develop.

Although the 1000 Genomes Project is now officially complete, there is a need to continue to develop and add data contributed by other researchers to further expand understanding of human variation. There are yet more layers of complexity to add, including ‘epigenetic’ changes that affect gene activity – the equivalent of symbols added to a score to indicate how loud or soft the music should be played – and differences in gene activity between different cell types – which parts of the orchestra play which parts of the melody. All of which will help scientists build up a clearer picture of how variations in genomic themes help to make each of us unique.

Looking for past print editions of EMBLetc.? Browse our archive, going back 20 years.

EMBLetc. archive