EMBL’s IT Teams – saving electricity, costs, and carbon

Rupert Lück, Jessica Klemeier, Jure Pečar, Remi Pinck, Eduard Avetisyan and Tim Dyce also contributed to this article.

Over the years, EMBL has seen an enormous increase in the computational data it manages. Advances in detector technologies, sequencing, and omics fields, as well as the opening of the new Imaging Centre, will all likely lead to continued exponential growth in data volume, approximately doubling every 18 to 24 months.
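To give a feel for what this doubling rate implies, the short sketch below projects the relative data volume after a number of years. The function name and the five-year horizon are illustrative assumptions, not EMBL planning figures.

```python
def growth_factor(years: float, doubling_months: float) -> float:
    """Relative data volume after `years`, assuming a fixed doubling time."""
    return 2 ** (years * 12 / doubling_months)


if __name__ == "__main__":
    # Compare the two ends of the 18-24 month range quoted above.
    for months in (18, 24):
        factor = growth_factor(5, months)
        print(f"doubling every {months} months: x{factor:.1f} after 5 years")
```

Under these assumptions, five years of growth multiplies the data volume by roughly 6 to 10.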

As data volume continues to grow, so does the need for analysis capacity. Sharing large volumes of data across EMBL sites or within international scientific consortia (along the lines of the Data Science transversal theme in the ‘Molecules to Ecosystems’ programme) will continue to place enormous demands on the scalability of the laboratory’s IT infrastructure. This includes compute power, AI, cloud services, different data storage types, and networking.

As we strive to become a sustainable organisation, we face the challenge of balancing a growing requirement for energy-intensive data management against the need to significantly cut energy use and, with it, our carbon footprint. It is safe to say that large-scale data management in the life sciences, facilitated through technological advances such as AlphaFold or new imaging technology, will be at the heart of much of the exciting research and new service opportunities at EMBL in the years to come.

Electricity consumption and IT

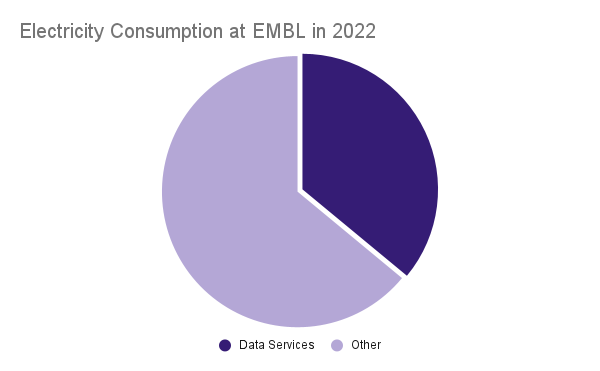

Even before accounting for the expected growth in data services, EMBL’s data centres are already the single biggest consumer of electricity across EMBL. In 2022, the data centres in Heidelberg, Hinxton (on-campus and external), Grenoble, and Hamburg together consumed 10.6 million kWh, the same amount of electricity as that consumed by 2,100 three-person households in Germany. This accounts for 36% of all the electricity EMBL consumes.

Such consumption has a large financial and environmental impact. In 2022, data services at EMBL were estimated to cost €1.8 million in electricity and to have a carbon footprint of 2,530 tCO2e.
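The headline figures can be turned into a few per-unit numbers with simple arithmetic. The sketch below does this under one explicit assumption: that the cost and the carbon footprint both relate to the same 10.6 million kWh. The function name is illustrative, and the results are implied averages rather than figures reported by EMBL.

```python
# Figures quoted in the article for 2022.
DATA_CENTRE_KWH = 10_600_000      # electricity used by all EMBL data centres
ELECTRICITY_COST_EUR = 1_800_000  # estimated electricity cost
FOOTPRINT_TCO2E = 2_530           # carbon footprint in tonnes CO2e
HOUSEHOLDS = 2_100                # equivalent three-person German households
DATA_CENTRE_SHARE = 0.36          # share of EMBL's total electricity use


def implied_metrics() -> dict:
    """Derive per-unit averages from the quoted totals (assumption-laden)."""
    return {
        "eur_per_kwh": ELECTRICITY_COST_EUR / DATA_CENTRE_KWH,
        "kg_co2e_per_kwh": FOOTPRINT_TCO2E * 1000 / DATA_CENTRE_KWH,
        "kwh_per_household": DATA_CENTRE_KWH / HOUSEHOLDS,
        "embl_total_kwh": DATA_CENTRE_KWH / DATA_CENTRE_SHARE,
    }


if __name__ == "__main__":
    for name, value in implied_metrics().items():
        print(f"{name}: {value:,.2f}")
```

On these assumptions, the figures imply an average of about €0.17 and about 0.24 kg CO2e per kWh, and a total EMBL electricity use of roughly 29 million kWh.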

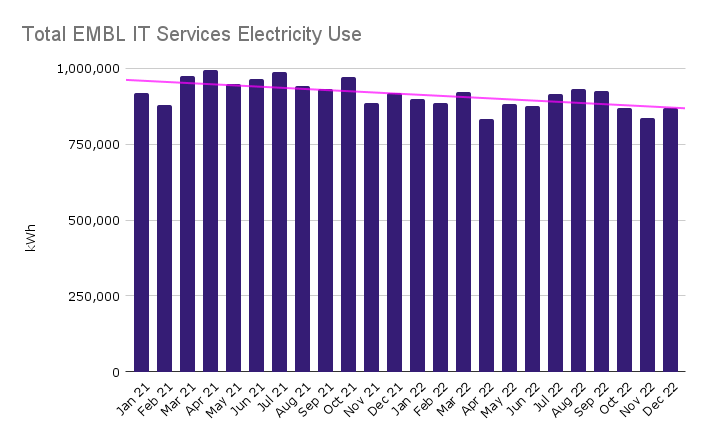

With these figures in mind, it is vital that EMBL operates its scientific data management as efficiently as possible to support its ambition to become a more sustainable organisation. The good news is that the IT Services Teams across our sites have been working on this for some time, and in 2022 we saw some really impressive results, including an 11% reduction in electricity use compared to 2021, as shown in this chart.

Parallel paths to reducing energy usage in Heidelberg

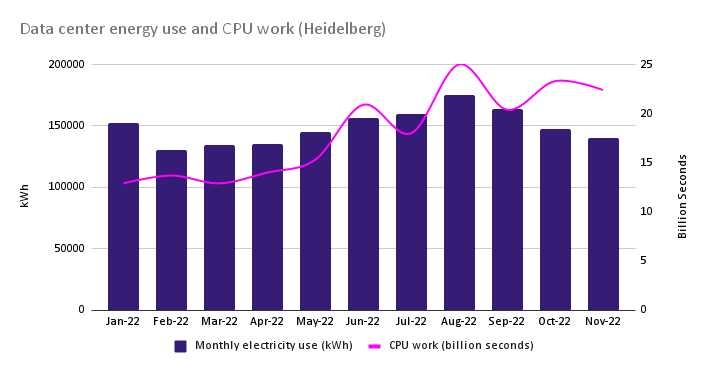

In Heidelberg, our data centre supports research on campus as well as at our other sites. The high-performance compute (HPC) services support the many different workloads of 400 researchers from 90 groups across EMBL. This can include, for example, piecing together data from imaging services to turn hundreds of thousands of 2D image slices into a beautiful 3D model of a protein complex. Complex scientific datasets require a lot of storage, and in Heidelberg the current capacity for scientific data is over 100 petabytes, a figure that continues to grow.

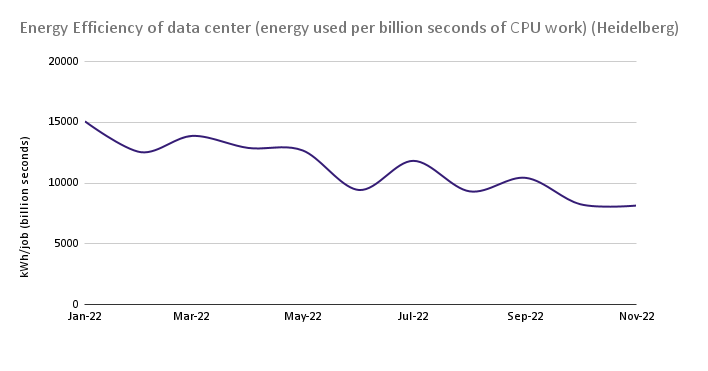

To reduce energy costs, Jure Pečar from the IT team in Heidelberg has implemented two effective initiatives. First, Jure observed that the dynamic nature of compute workloads associated with EMBL research means that not all of the available resources are always in use. However, even while idle, compute nodes still consume power to stay awake and available.

Our researchers don’t typically require these nodes to be available within milliseconds and can afford to wait a minute or two for them. So Jure implemented a simple but effective strategy: automatically powering off these nodes after an idle period and powering them back up when demand returns.
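The idea behind this strategy can be sketched as a small decision function. This is an illustration only: the article does not describe the scheduler or tooling used in Heidelberg, so the `Node` class, the function name, the ten-minute timeout, and the one-job-per-node simplification are all hypothetical.

```python
from dataclasses import dataclass
from typing import List, Optional, Tuple

IDLE_TIMEOUT_S = 600.0  # hypothetical idle period before a node is powered off


@dataclass
class Node:
    name: str
    powered_on: bool = True
    running_jobs: int = 0
    idle_since: Optional[float] = None  # timestamp when the node became idle


def plan_power_actions(
    nodes: List[Node],
    pending_jobs: int,
    now: float,
    idle_timeout_s: float = IDLE_TIMEOUT_S,
) -> Tuple[List[str], List[str]]:
    """Return (nodes to power off, nodes to power on) for one scheduling pass."""
    idle_on = [n for n in nodes if n.powered_on and n.running_jobs == 0]
    sleeping = [n for n in nodes if not n.powered_on]

    # Wake only as many nodes as the idle, powered-on ones cannot absorb
    # (simplification: one pending job occupies one node).
    shortfall = max(0, pending_jobs - len(idle_on))
    to_power_on = [n.name for n in sleeping[:shortfall]]

    # Power off only when nothing is waiting and the idle period has elapsed.
    to_power_off: List[str] = []
    if pending_jobs == 0:
        to_power_off = [
            n.name
            for n in idle_on
            if n.idle_since is not None and now - n.idle_since >= idle_timeout_s
        ]
    return to_power_off, to_power_on
```

The trade-off is the one described above: a job that arrives while nodes are powered off waits for them to boot, which is acceptable when users can tolerate a delay of a minute or two.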

In October, the team tackled the next challenge. Inspired by the ‘ECO-mode’ of the latest generation of CPUs, the team mimicked it on our current machines, which run on previous-generation CPUs. Part of this change was to limit the maximum frequency at which these CPUs can operate. This reduces the electricity consumed by the CPUs and also means the units run at a lower temperature, lowering the demand on the data centre cooling systems. Previously, the data centre air supply had to be cooled to 21°C; now the team can operate the room at 24°C. Heat is removed from the data centre via chilled water, previously held at a temperature of 12°C. Thanks to the mimicked CPU ECO-mode, the water temperature can now be raised to 16°C even during summertime, further reducing the load on the data centre cooling system and the electrical power it consumes.
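The article does not say how the frequency limit was applied. As one possible illustration, Linux exposes a per-CPU `scaling_max_freq` file (in kHz) through its cpufreq sysfs interface, and the sketch below writes a cap to it. The function name and the cap value are hypothetical, writing to the real sysfs tree requires root privileges, and this covers only the frequency limit, not a full vendor ECO-mode.

```python
from pathlib import Path
from typing import List


def cap_cpu_frequency(
    cap_khz: int, sysfs_root: str = "/sys/devices/system/cpu"
) -> List[str]:
    """Limit the maximum scaling frequency of every CPU that exposes cpufreq.

    Returns the names of the CPUs that were updated (e.g. "cpu0").
    """
    updated = []
    for cpufreq in sorted(Path(sysfs_root).glob("cpu[0-9]*/cpufreq")):
        hw_min = int((cpufreq / "cpuinfo_min_freq").read_text())
        hw_max = int((cpufreq / "cpuinfo_max_freq").read_text())
        # Keep the requested cap within the range the hardware supports.
        target = max(hw_min, min(cap_khz, hw_max))
        (cpufreq / "scaling_max_freq").write_text(f"{target}\n")
        updated.append(cpufreq.parent.name)
    return updated


if __name__ == "__main__":
    # Hypothetical cap of 2.4 GHz; the value used at EMBL is not given.
    print(cap_cpu_frequency(2_400_000))
```

The `sysfs_root` parameter exists so the function can be exercised against a temporary directory before it is pointed at real hardware.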

Overall, these changes have produced immediate and significant energy savings in the data centre, as highlighted in the charts below, which show that significantly less electricity is consumed per billion seconds of CPU work. Jure and the IT Services team expect that, once the possible impact has been tested, a similar strategy could be implemented for the 200 GPUs in the Heidelberg data centre, the specialised graphics processor cards that are critical in supporting EMBL’s massive AI workloads.

Optimising the energy consumption of the HPC cluster in Hamburg

EMBL Hamburg’s IT team, led by Eduard Avetisyan, has implemented measures similar to those in Heidelberg, including a workflow that automatically shuts down servers in the Hamburg High Performance Computing (HPC) cluster when they are not occupied by calculations and turns them back on once user jobs are submitted. These optimisations save up to 100 kWh per day. Further optimisation is planned with the replacement of old batch nodes with more efficient high-performance nodes. The shutdown of idle nodes is also being implemented on a second HPC cluster in Hamburg, dedicated to processing beamline data. In addition, the cooling of the server rooms has been adjusted to allow higher air temperatures.

Optimising energy usage by EMBL-EBI’s data services

The EMBL-EBI data services are by far the biggest data service at EMBL, consuming over 8,000,000 kWh of electricity per year and accounting for over 1,500 tonnes of CO2 on their own. In Hinxton, the team led by Tim Dyce has been reducing the environmental impact of EMBL-EBI’s data services for the last three years, with impressive results.

Did you know?

Power Usage Effectiveness (PUE) is the ratio of the total power used by a data centre (IT equipment plus cooling, lighting, security, etc.) to the power used by the IT equipment alone. The lower the PUE, the more efficient the data centre, with 1.0 as the theoretical minimum.

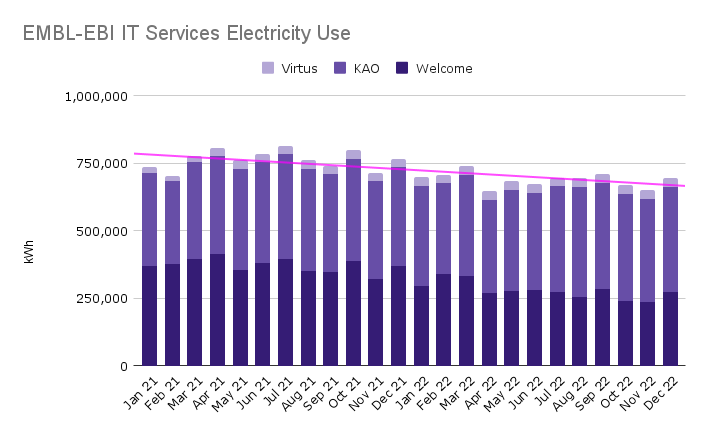

The team uses the services of three third-party data centres: the original one on the Wellcome Genome Campus, the Virtus centre in Slough, and most recently, Kao in Harlow. The Kao data centre is extremely energy efficient, with a PUE of 1.25, which compares favourably to a global average of 1.57.
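To see what this difference in PUE means in practice, recall that PUE is total facility energy divided by IT equipment energy. The sketch below compares the total energy needed for the same IT load at the two PUE values quoted above. The IT load of 1,000,000 kWh is a hypothetical round number, not an EMBL-EBI figure, and the function names are illustrative.

```python
def pue(total_facility_kwh: float, it_equipment_kwh: float) -> float:
    """Power Usage Effectiveness: total facility energy / IT equipment energy."""
    if it_equipment_kwh <= 0:
        raise ValueError("IT equipment energy must be positive")
    return total_facility_kwh / it_equipment_kwh


def facility_energy(it_equipment_kwh: float, pue_value: float) -> float:
    """Total energy a facility draws to deliver a given IT load."""
    return it_equipment_kwh * pue_value


def relative_saving(pue_old: float, pue_new: float) -> float:
    """Fraction of total energy saved by moving the same IT load."""
    return 1 - pue_new / pue_old


if __name__ == "__main__":
    it_load_kwh = 1_000_000  # hypothetical annual IT load
    average = facility_energy(it_load_kwh, 1.57)
    kao = facility_energy(it_load_kwh, 1.25)
    print(f"global average: {average:,.0f} kWh, Kao: {kao:,.0f} kWh")
    print(f"saving: {relative_saving(1.57, 1.25):.1%}")
```

For an identical IT load, a facility at PUE 1.25 draws about 20% less total energy than one at the global average of 1.57.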

Along with moving data to more efficient centres, EMBL-EBI’s IT Services teams have also worked directly with users and research groups to migrate and consolidate their data and computational activity onto newer, more power-efficient platforms. Through this migration, many petabytes of data have been identified as no longer required, and the deletion of this data has resulted in immediate and ongoing reductions in electricity use.

Moving our services from the less efficient centres to Kao and consolidating data is a huge ongoing task for Tim’s team, but the results in terms of energy savings are already clear, as shown in the chart below. Energy use has fallen steadily, resulting in a 10% reduction in electricity consumption in 2022.

Free Cooling in Grenoble

In Grenoble, reducing the environmental impact of IT Services was on the agenda back in 2017 when all of the HPC compute systems were transferred into a container and placed outside the centre, in the open. According to Remi Pinck, who leads the Grenoble IT service, this had two major environmental benefits. First, when the weather is suitable (as it often is in Grenoble), large fans blow fresh air from outside through the container, keeping the servers cool enough without the need for any additional cooling systems.

This ‘free-cooling’ is the lowest-impact cooling system available. When outside temperatures are not suitable, such as in summer, the container uses an adiabatic cooling system, which uses the thermodynamic characteristics of evaporating water to cool down the hot summer air. This system is less energy intensive than traditional systems and, importantly, doesn’t rely on refrigerants. Many refrigerants, if leaked, have a much higher global warming potential than carbon dioxide.

The role of researchers

Using the computational and storage resources can be a large part of an individual scientist’s environmental impact. We must all be cognisant of the scale of the demand we put on our data centres and the impact this has in terms of energy, carbon, and operating costs. If you are looking for helpful tips to make your own computing more sustainable, EMBL-EBI’s very own Dr. Alex Bateman co-authored this paper that lists 10 simple steps towards this goal. For a wider view of the steps needed to reduce the impact of data services, this paper introduces seven principles for environmentally sustainable computational science.